Strategies for Managing Peak Loads

Managing peak loads is a central challenge for IT architectures. To keep performance acceptable as traffic grows, it is often necessary to rethink and adjust the architecture. Below, we explore the key approaches and principles for handling peak loads efficiently.

Scaling Up vs. Scaling Out

Vertical Scaling (Scaling Up)

This approach moves the workload to a more powerful machine: more computing power, memory, or storage capacity in a single system.

Horizontal Scaling (Scaling Out)

This approach distributes the load across multiple smaller machines that operate in a “shared-nothing” architecture, meaning each machine functions independently.

Using high-end machines can be expensive, especially for very intensive workloads. Therefore, horizontal scaling is often the preferred choice. In practice, a combination of both approaches typically provides the best solution.

Elastic vs. Manual Scaling

Elastic Systems

These systems automatically add computing resources when the load increases. They are especially useful for unpredictable peak loads, as they can respond quickly.

Manual Scaling

In this approach, a person analyzes capacity and decides when to add machines. Manually scaled systems are often simpler to operate and present fewer operational surprises.

Both approaches have advantages, and the choice depends on specific requirements and the predictability of the load.

Stateless vs. Stateful Services

Stateless Services

Stateless services are relatively easy to distribute across multiple machines, because each node can handle requests independently of the state of the other nodes.

Stateful Systems

In contrast, stateful systems are more complex to distribute as the system state must be synchronized across multiple machines.

Historically, databases were often kept on a single node until scaling costs or high-availability requirements made distribution necessary. However, as the tools and abstractions for distributed systems improve, distributed data storage may become the norm even for smaller applications.

Architectures Tailored to Specific Applications

There is no universal solution for a scalable architecture. Scalability challenges vary greatly depending on read and write volumes, data size, data complexity, response time requirements, and access patterns.

For example, a system processing 100,000 requests per second (each 1 KB) differs fundamentally from one handling three requests per minute (each 2 GB), even though both move roughly the same amount of data per second.
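
The arithmetic behind this comparison can be checked with a short sketch. It assumes decimal units (1 KB = 1,000 bytes, 1 GB = 1,000,000,000 bytes); the function name is purely illustrative.

```python
KB = 1_000            # bytes (decimal units are an assumption)
GB = 1_000_000_000    # bytes


def throughput_bytes_per_second(requests, per_seconds, request_size_bytes):
    """Average data rate for `requests` of the given size arriving every `per_seconds` seconds."""
    return requests * request_size_bytes / per_seconds


# 100,000 requests per second, 1 KB each
many_small = throughput_bytes_per_second(100_000, 1, 1 * KB)

# 3 requests per minute, 2 GB each
few_large = throughput_bytes_per_second(3, 60, 2 * GB)

print(many_small / 1_000_000, "MB/s")
print(few_large / 1_000_000, "MB/s")
```

Both workloads average about 100 MB/s, yet the first stresses per-request overhead and connection handling while the second stresses bandwidth and storage for individual large transfers.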

Assumptions and Load Parameters

Scalable architectures rely on assumptions about which operations are frequent and which are rare. Incorrect assumptions can lead to unnecessary development efforts or even counterproductive outcomes. In the early stages of startups, it is more important to develop and test new product features quickly than to prepare for hypothetical future peak loads.

General Building Blocks

Scalable architectures are usually assembled from general building blocks arranged in well-known patterns. These proven blocks make it possible to design systems that are both robust and scalable:

Load Balancing

- Strategy: Distributes incoming traffic across multiple servers to prevent overloading individual machines.

- Advantage: Increases system availability and performance.

- Example: Using load balancers like NGINX or HAProxy.
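
To illustrate the idea, here is a minimal round-robin sketch. Round-robin is only one of several distribution strategies, and this is not how NGINX or HAProxy are implemented or configured; the class name and the backend addresses are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation (illustrative only)."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(backends)

    def next_backend(self):
        # Each call returns the next backend and wraps around at the end.
        return next(self._rotation)


# Hypothetical backend addresses
balancer = RoundRobinBalancer(["app-1:8080", "app-2:8080", "app-3:8080"])
assigned = [balancer.next_backend() for _ in range(6)]
print(assigned)
```

Six requests are spread evenly, two per backend. A production load balancer would also take health checks and connection counts into account.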

Caching

- Strategy: Temporarily stores frequently accessed data to reduce direct database queries.

- Advantage: Speeds up data access times and reduces backend load.

- Example: Using caching systems like Redis or Memcached.
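
The following sketch shows the cache-aside idea with a time-to-live, using an in-process dictionary as a stand-in for a system like Redis or Memcached. The class `TTLCache` and the loader `load_user_from_db` are hypothetical names chosen for the example.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after `ttl_seconds` (illustrative only)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]              # cache hit: backend is not touched
        value = loader(key)              # cache miss or expired: ask the backend
        self._entries[key] = (now + self._ttl, value)
        return value


db_calls = []


def load_user_from_db(user_id):
    # Stand-in for a real database query.
    db_calls.append(user_id)
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl_seconds=30)
first = cache.get_or_load(42, load_user_from_db)
second = cache.get_or_load(42, load_user_from_db)
print(len(db_calls))  # only the first lookup reached the "database"
```

The expiry time bounds how stale a cached value can become; choosing it is a trade-off between freshness and backend load.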

Auto-Scaling

- Strategy: Automatically adds or removes computing resources based on the current load.

- Advantage: Optimizes resource usage and costs through demand-driven scaling.

- Example: Leveraging cloud services like AWS Auto Scaling or Google Cloud Autoscaler.
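
As a rough sketch of the decision an auto-scaler makes, the function below applies a simple proportional rule: scale the instance count by the ratio of observed to target utilization, then clamp it to configured limits. This is an assumption made for illustration and does not reproduce the actual policies of AWS Auto Scaling or Google Cloud Autoscaler; all names and default values are hypothetical.

```python
import math


def desired_instances(current, current_utilization_pct, target_utilization_pct,
                      min_instances=1, max_instances=10):
    """Proportional scaling rule; utilization values are percentages (0-100)."""
    if target_utilization_pct <= 0:
        raise ValueError("target utilization must be positive")
    raw = math.ceil(current * current_utilization_pct / target_utilization_pct)
    # Never go below the minimum or above the maximum fleet size.
    return max(min_instances, min(max_instances, raw))


scale_out = desired_instances(current=4, current_utilization_pct=90, target_utilization_pct=60)
scale_in = desired_instances(current=6, current_utilization_pct=20, target_utilization_pct=60)
print(scale_out, scale_in)
```

Real auto-scalers typically add cooldown periods and smoothing so that short spikes do not cause the fleet size to oscillate.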

Microservices Architecture

- Strategy: Breaks a monolithic application into smaller, independent services with specific tasks.

- Advantage: Enhances flexibility and scalability through independent services.

- Example: Implementing an e-commerce system with separate microservices for orders, payments, and user management.

Circuit Breaker Pattern

- Strategy: Introduces a mechanism that isolates faulty parts of a system to prevent cascading failures.

- Advantage: Increases stability and prevents errors from spreading across the system.

- Example: Wrapping calls to a microservice in a circuit breaker that stops sending further requests once repeated failures are detected.
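
A minimal, single-threaded sketch of the pattern might look as follows. The threshold and timeout values, the class name, and the exception type are assumptions for illustration; established libraries provide more complete implementations.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self._failure_threshold = failure_threshold
        self._reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_timeout:
                raise CircuitOpenError("circuit is open; request rejected")
            # Timeout elapsed: let one trial call through (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self._opened_at is not None or self._failures >= self._failure_threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and resets the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open, callers fail fast instead of waiting on a service that is already struggling, which gives that service time to recover.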

Asynchronous Processing

- Strategy: Processes tasks asynchronously to improve response times and evenly distribute load.

- Advantage: Reduces user wait times and optimizes resource utilization.

- Example: Using message queues like RabbitMQ or Kafka.
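
The sketch below shows the principle with Python's standard `queue` and `threading` modules standing in for a message broker. Unlike RabbitMQ or Kafka, this in-process queue offers no durability or distribution; the function names and payloads are hypothetical.

```python
import queue
import threading


def start_worker(tasks, results):
    """Starts a background thread that processes jobs until it sees the None sentinel."""
    def run():
        while True:
            job = tasks.get()
            if job is None:
                tasks.task_done()
                break
            results.append(job.upper())  # stand-in for slow work
            tasks.task_done()

    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread


tasks = queue.Queue()
results = []
worker = start_worker(tasks, results)


def handle_request(payload):
    # Enqueue the work and answer immediately instead of waiting for it.
    tasks.put(payload)
    return "accepted"


responses = [handle_request(p) for p in ["resize image", "send email"]]
tasks.put(None)   # tell the worker to stop after the queued jobs
tasks.join()      # wait here only so the example can show the results
print(responses, results)
```

The request handler returns as soon as the job is queued, so bursts of traffic fill the queue rather than blocking users; the worker drains it at its own pace.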

Monitoring and Health Checks

- Strategy: Continuously monitors the system and runs regular health checks to detect problems early.

- Advantage: Improves uptime and enables proactive maintenance.

- Example: Implementing monitoring solutions like Prometheus, Grafana, or Datadog.
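
A health check can be as simple as running a set of probes and reporting an overall status. The sketch below assumes each probe is a callable that returns a truthy value when its dependency is reachable; the probe names are hypothetical, and this is not how Prometheus, Grafana, or Datadog are configured.

```python
def check_health(checks):
    """Runs every probe and summarizes the results; a raising probe counts as failed."""
    results = {}
    for name, probe in checks.items():
        try:
            results[name] = bool(probe())
        except Exception:
            results[name] = False
    status = "healthy" if all(results.values()) else "unhealthy"
    return {"status": status, "checks": results}


def database_probe():
    return True  # stand-in for e.g. running a trivial query


def cache_probe():
    raise ConnectionError("cache unreachable")  # simulated outage


report = check_health({"database": database_probe, "cache": cache_probe})
print(report)
```

A load balancer or orchestrator could poll such a report and stop routing traffic to an instance that reports itself unhealthy.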

Redundancy and High Availability

- Strategy: Runs redundant instances of critical components so that a single failure does not cause downtime.

- Advantage: Increases system stability and ensures continuous availability.

- Example: Using clustering and replication for critical components like databases and web servers.

Immutable Infrastructure

- Strategy: Replaces servers or containers with newly built ones instead of modifying them in place when something changes.

- Advantage: Reduces the risk of configuration errors and simplifies infrastructure management.

- Example: Using Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation.

Bulkhead Pattern

- Strategy: Divides a system into isolated parts (bulkheads) to limit the impact of failures.

- Advantage: Increases resilience by ensuring errors in one part of the system do not affect the whole.

- Example: Separating functions such as authentication and database access into different services or containers.
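
Within a single process, the same idea can be sketched by giving each function its own limited pool of concurrent slots, so that one overloaded function cannot exhaust capacity needed by another. The class name, the pool names, and the limits below are assumptions for illustration.

```python
import threading


class BulkheadFullError(RuntimeError):
    """Raised when a compartment has no free slot."""


class Bulkhead:
    def __init__(self, max_concurrent):
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, func, *args, **kwargs):
        # Reject immediately instead of queueing when the compartment is full.
        if not self._slots.acquire(blocking=False):
            raise BulkheadFullError("no free slot in this compartment")
        try:
            return func(*args, **kwargs)
        finally:
            self._slots.release()


auth_pool = Bulkhead(max_concurrent=1)
report_pool = Bulkhead(max_concurrent=1)


def slow_report():
    # While this report occupies its only slot, authentication still has capacity.
    return auth_pool.call(lambda: "token issued")


outcome = report_pool.call(slow_report)
print(outcome)
```

Because each compartment has its own limit, a flood of report requests is rejected at the report bulkhead and never consumes the slots reserved for authentication.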

Conclusion

Managing peak loads requires careful planning and the selection of appropriate strategies. A deep understanding of your application’s specific requirements is critical to finding the right balance between vertical and horizontal scaling, elastic and manual scaling, and stateless and stateful services. With the right approaches, you can build systems that not only meet today’s demands but also adapt to future growth.

What is API Management?

What is API Management? In today’s digital world, APIs (Application Programming Interfaces) are the …

Microservices Model

1,058 words, 6 minutes read time.

Enterprise Application Integration (EAI)

1,165 words, 6 minutes read time.

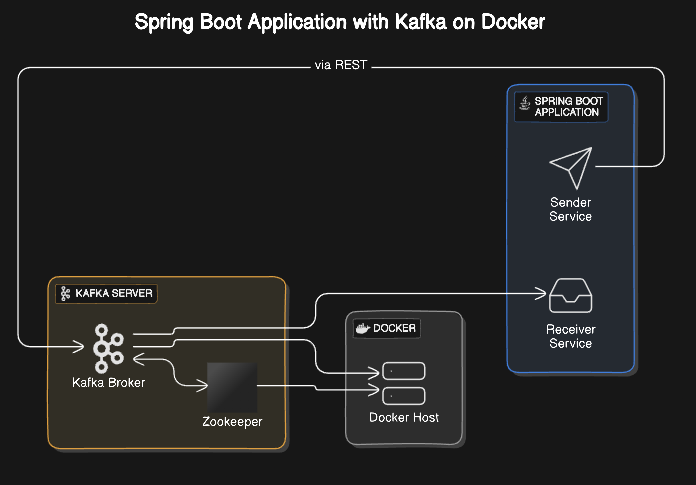

Spring Boot – Kafka – Docker

905 Wörter, 5 Minuten Lesezeit.

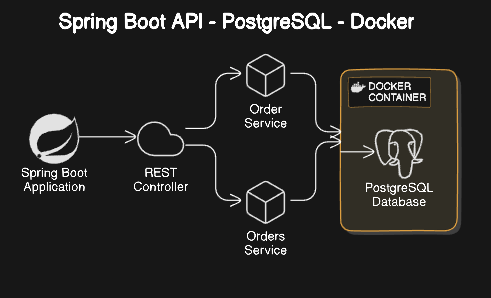

Spring Boot API – PostgreSQL – Docker

1,163 words, 6 minutes read time.

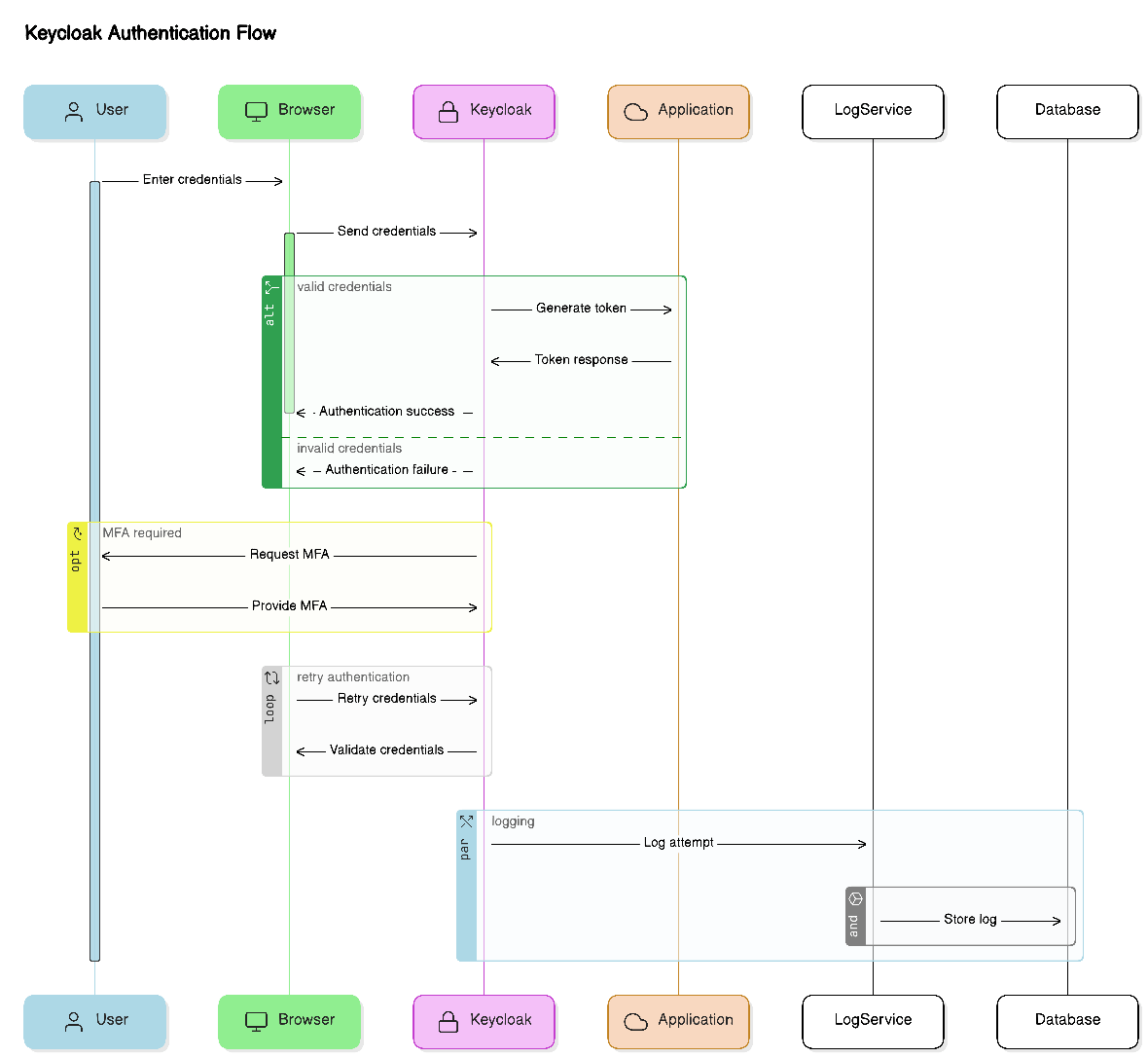

Keycloak and IT Security

970 words, 5 minutes read time.